Google's "Nano Banana": Deconstructing the AI That Revolutionized Photo Editing

Peyara Nando

Dev

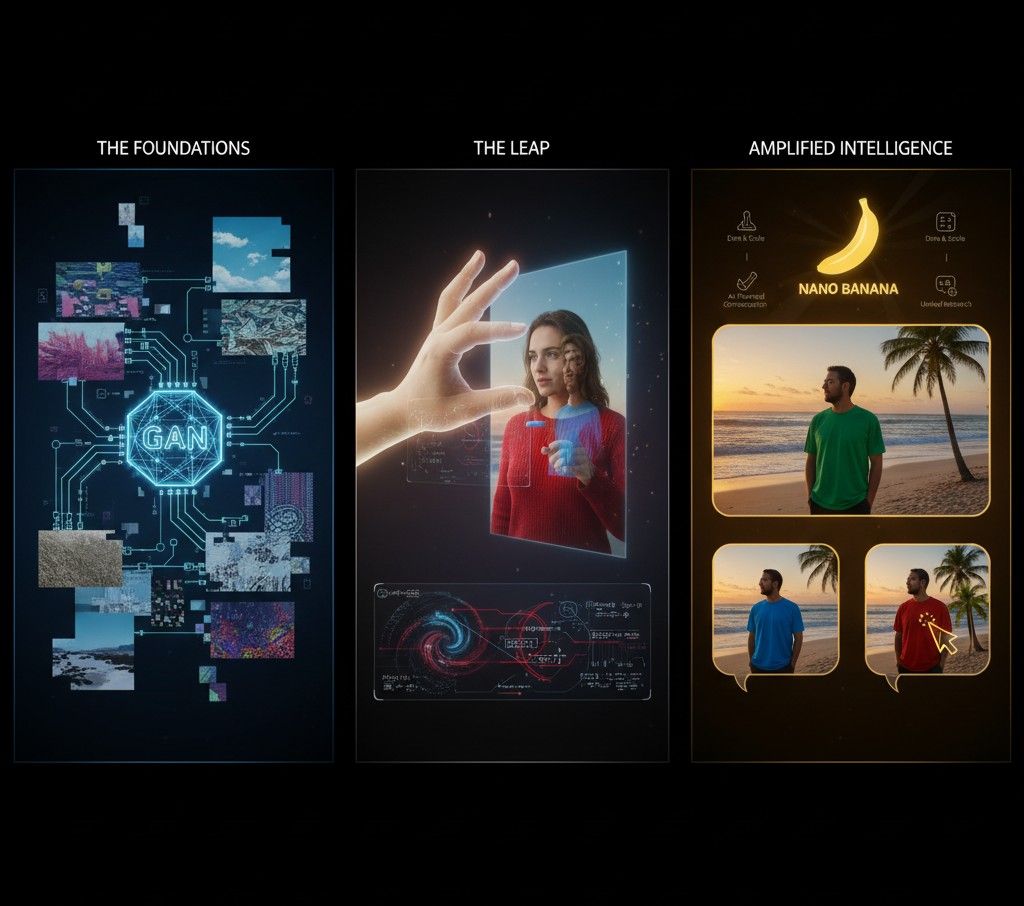

We all want that magic button: change your sweater to blue, make that background look like a serene beach, fix that lighting issue with a word. Google's "Nano Banana," a mysterious AI that dominated anonymous editing leaderboards, appears to have cracked the code. It can perform edits with an astonishing level of precision and consistency that feels like magic. But how did this digital sorcerer come to be? It’s not just about having a big brain; it's about standing on the shoulders of giants, taking key research breakthroughs, and amplifying them with Google's unparalleled scale.

The Quest Begins: From Pixel Patching to the Promise of Language

The dream of effortless image editing began with early computer vision techniques from the late 1990s and early 2000s. Papers like Efros and Leung's 1999 "Texture Synthesis by Non-parametric Sampling" showed algorithms could replicate visual patterns, while Bertalmio et al.'s 2000 "Image Inpainting" demonstrated computational art restoration. Criminisi et al.'s 2004 work unified these ideas, creating "exemplar-based" editing that could intelligently fill gaps. These were the foundational building blocks, teaching machines the basic rules of visual coherence.

Then came the deep learning revolution, spearheaded by Ian Goodfellow's 2014 Generative Adversarial Networks (GANs). By pitting two networks against each other, a generator that produces images and a discriminator that tries to spot fakes, GANs learned to generate incredibly realistic images. Pathak et al.'s 2016 "Context Encoders" showed that adversarially trained networks could even capture semantics by filling in missing image parts, hinting at a future where AI could "understand" what was in a photo.

The First Big Leap: InstructPix2Pix and the Birth of Conversational Editing

The true turning point, the spark that ignited the modern era of AI editing, was Tim Brooks' 2022 InstructPix2Pix research. This paper was a revelation because it proposed a way to edit images using natural language. The team ingeniously combined GPT-3's language prowess with diffusion models (which were starting to solve GANs' instability problems, as seen in models like RePaint and GLIDE). They generated hundreds of thousands of synthetic training examples: in effect, an AI-created textbook of "before and after" edits based on simple text commands.
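As a rough sketch of what one entry in that synthetic "textbook" might look like as data, the Python below builds a single before/instruction/after triplet. The names `EditExample`, `propose_edit`, and `fake_render` are hypothetical: the first stub stands in for the language model that writes an instruction and an edited caption, and the second stands in for the image generator. None of this is the authors' actual code.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class EditExample:
    """One synthetic training triplet: image before, instruction, image after."""
    before_image: str
    instruction: str
    after_image: str


def propose_edit(caption: str) -> Tuple[str, str]:
    # Hypothetical stand-in for the language model: returns an edit
    # instruction plus the caption that describes the edited image.
    return "make the sweater blue", caption.replace("red", "blue")


def fake_render(caption: str) -> str:
    # Hypothetical stand-in for a text-to-image model; returns a label
    # instead of pixels so the sketch stays self-contained.
    return f"<image of: {caption}>"


def build_example(caption: str) -> EditExample:
    """Turn one caption into a (before, instruction, after) training example."""
    instruction, edited_caption = propose_edit(caption)
    return EditExample(
        before_image=fake_render(caption),
        instruction=instruction,
        after_image=fake_render(edited_caption),
    )


example = build_example("a woman in a red sweater")
print(example.instruction)
print(example.before_image, "->", example.after_image)
```

Repeating this over a large set of captions is what would produce a dataset of the scale described above; an editing model is then trained to map the first two fields to the third.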

This was the democratization moment: users could finally "ask" their photos to change. But InstructPix2Pix, for all its brilliance, wrestled with a crucial issue: consistency. Ask it to change your sweater, and it might accidentally alter your face or background. It was a major step forward, but the AI lacked the nuanced understanding to leave other elements untouched.

The "Consistency Problem" and the Need for Precision

The core challenge for AI editors like InstructPix2Pix was preserving identity and overall semantic integrity. If you ask the model to change a smile, you don't want the entire facial structure to warp. Conversely, if you ask it to add snow, you don't want the lighting and shadows to stay exactly as they were, which would create a visually jarring effect.

This is where a flurry of focused research started to tackle these specific problems:

- Precision Control via Attention: Papers like "Prompt-to-Prompt Image Editing with Cross Attention Control" (Hertz et al., 2022) discovered that the "attention layers" within diffusion models – the parts that help the AI focus on specific words and image regions – were key. By manipulating these attention maps, researchers found they could guide edits with unprecedented precision, directly influencing where and how a change occurred. This was crucial for localized modifications without unintended side effects.

- Identity Preservation: For tasks like facial editing, maintaining a person's core identity is paramount. Research like "InstaFace: Identity-Preserving Facial Editing" (2025) and "DreamIdentity: Enhanced Editability for Efficient Face-Identity Preserved Image Generation" (2024) began developing specialized methods. These often involved learning specific feature embeddings from facial recognition models or using dedicated encoders to ensure that, no matter the edit, the subject's fundamental identity remained intact.

- Maintaining Structure and Semantics: Other papers tackled the broader "consistency problem." Research into "Consistent Image Layout Editing" (2025) explored how to modify image layouts while preserving visual appearances, and approaches like "Inverse-and-Edit: Effective and Fast Image Editing by Cycle Consistency Models" (2025) improved the process of getting an image into an editable format while ensuring fidelity to the original.
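To make the attention-control idea from the list above more concrete, here is a minimal toy sketch in plain Python. It assumes a single cross-attention layer with tiny hand-picked vectors, and the function names (`cross_attention_maps`, `attend`, `edit_with_injected_attention`) are illustrative rather than taken from any paper's code. The point it shows: the attention maps computed for the original prompt decide where each image patch looks, so reusing them while swapping in the edited prompt's token values changes the content but keeps the layout.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention_maps(queries, keys):
    """One row per image patch: attention weights over the prompt tokens."""
    scale = math.sqrt(len(keys[0]))
    return [
        softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        for q in queries
    ]


def attend(maps, values):
    """Weighted sum of token value vectors for each patch."""
    dim = len(values[0])
    return [
        [sum(w * v[d] for w, v in zip(row, values)) for d in range(dim)]
        for row in maps
    ]


def edit_with_injected_attention(queries, src_keys, tgt_values):
    """Reuse the source prompt's maps (layout) with the edited prompt's values (content)."""
    src_maps = cross_attention_maps(queries, src_keys)
    return src_maps, attend(src_maps, tgt_values)


# Toy setup: 2 image patches, 3 prompt tokens ("a", "red"/"blue", "sweater").
queries = [[1.0, 0.0], [0.0, 1.0]]
src_keys = [[0.1, 0.1], [2.0, 0.0], [0.0, 2.0]]  # keys from the source prompt
tgt_values = [[0.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.5, 0.5]]  # values from the edited prompt

src_maps, edited = edit_with_injected_attention(queries, src_keys, tgt_values)
print(src_maps)
print(edited)
```

In a real diffusion model this would happen across many layers and denoising steps, and the published methods add further controls on when and how strongly to inject the maps; the toy above only captures the core substitution.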

Google's "Nano Banana": Standing on the Giants' Shoulders, Amplified

This is where Google's "Nano Banana" (officially Gemini 2.5 Flash Image) likely enters the picture, not as a lone innovator, but as a masterful synthesizer and amplifier of these existing breakthroughs, supercharged by its unique advantages:

- Leveraging Attention Control: Nano Banana plausibly harnesses the principles discovered in papers like Hertz et al.'s to achieve its precise, localized edits. By steering its attention mechanisms, it could interpret your request ("make the sweater blue") and direct the generation process precisely to that region, leaving other parts untouched.

- Mastering Identity and Consistency: The model's ability to preserve identity and scene context suggests Google has deeply integrated the lessons from identity-preserving research. It's likely using advanced techniques to encode and protect the subject's core features, perhaps inspired by methods like InstaFace, but scaled and refined.

- Unparalleled Data and Computing: While papers like InstructPix2Pix relied on synthetic data, Google's access to billions of real-world user interactions with photos is a game-changer. Training on how real people actually describe edits (the nuances, the common requests, the subtle visual cues they respond to) is invaluable. This "real-world learning" likely gives Nano Banana a profound semantic understanding that synthetic data alone can't replicate. Combined with immense computing power, this allows for training models that can execute these complex instructions with remarkable consistency.

- Conversational Refinement: The ability to handle follow-up commands ("actually, make it blue") points to robust conversational memory, likely built upon research into "Multi-turn Consistent Image Editing," ensuring that each edit builds logically and coherently on the last.
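The identity-preservation point in the list above can be illustrated with a small check that compares face embeddings before and after an edit. This is only a sketch under stated assumptions: the embedding vectors are made-up numbers standing in for the output of some face-recognition model, the `identity_preserved` helper and its `0.8` threshold are hypothetical, and nothing here describes how Nano Banana actually works internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def identity_preserved(before, after, threshold=0.8):
    """Hypothetical check: treat the edit as identity-preserving if the
    face embeddings stay close. The threshold is an illustrative value."""
    return cosine_similarity(before, after) >= threshold


# Made-up embeddings standing in for a face-recognition model's output.
face_before = [0.90, 0.10, 0.40]
face_after_sweater_edit = [0.88, 0.12, 0.41]  # face barely changed
face_after_bad_edit = [0.10, 0.90, -0.30]     # face drifted badly

print(identity_preserved(face_before, face_after_sweater_edit))
print(identity_preserved(face_before, face_after_bad_edit))
```

A similarity score like this could serve either as an evaluation metric or as a training signal that penalizes edits which drift away from the original subject.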

In essence, Nano Banana didn't invent these concepts in a vacuum. It took the foundational ideas of instruction-based editing, the precise control offered by attention, the critical need for identity preservation, and the general challenge of consistency, and then applied Google's massive resources to push them to an unprecedented level. It’s the culmination of years of research, distilled into an AI that feels less like a tool and more like a collaborative artist. The "mystery" wasn't in its existence, but in how it managed to so flawlessly execute what countless researchers had been striving towards for years.