Fine-tuning at Intuit Biztec: Unleashing the Power of Specialized AI

Peyara Nando

Dev

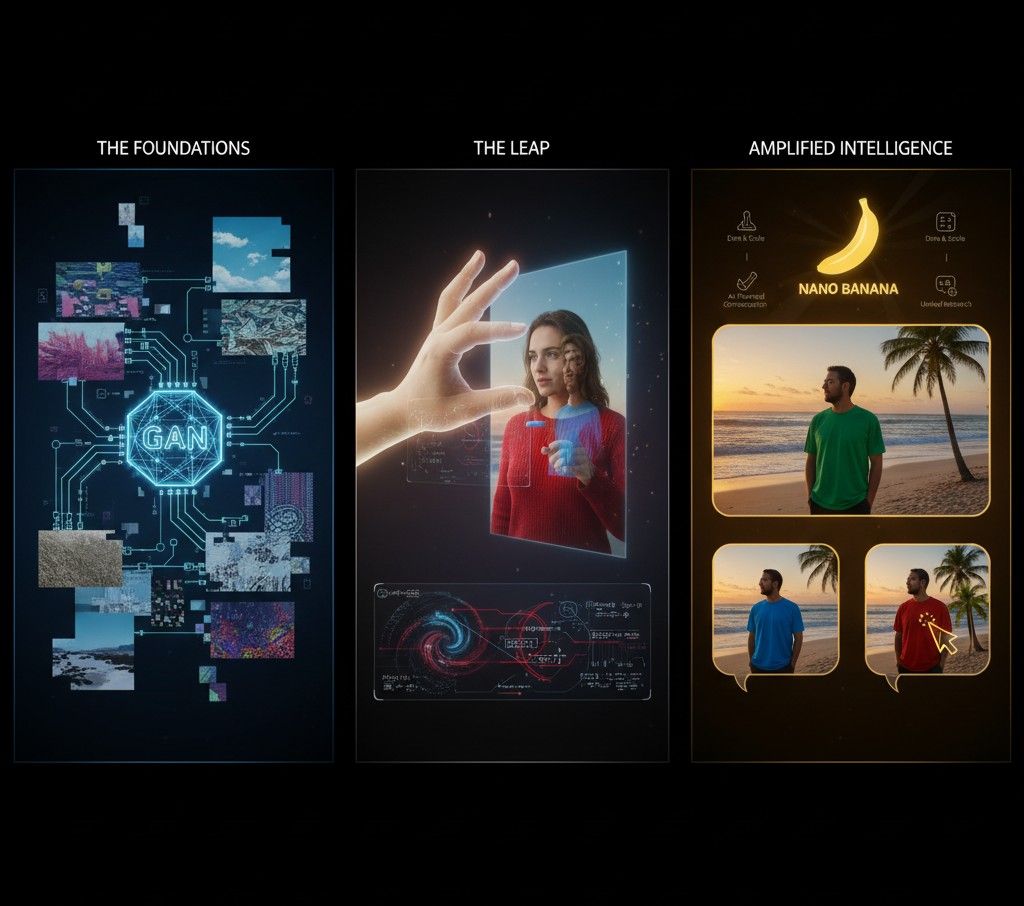

Large language models (LLMs) like Llama 3.3 are impressive generalists. They can perform a wide range of tasks, from mathematical calculations and career planning to debugging code. Think of them as a "jack of all trades," possessing a broad understanding of various subjects. However, this breadth comes at a cost. When faced with complex, nuanced problems, these general-purpose models often struggle, losing context and failing to deliver accurate or relevant results. Their general knowledge isn't sufficient for the intricacies of specific workflows.

At Intuit Biztec, we're addressing this limitation by fine-tuning open-source LLMs like Llama 3.3. This process involves taking the pre-trained general model and exposing it to our specific workflows and datasets. Through backpropagation, a key machine learning technique, we retrain the model, subtly adjusting its internal parameters to optimize it for our unique use cases. Essentially, we're transforming the "jack of all trades" into a specialized expert.
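As a toy illustration of what "adjusting internal parameters" means, the sketch below fine-tunes a two-parameter linear model with plain gradient descent. It is not our training pipeline: the model, the "pre-trained" starting values, the data, and the learning rate are all hypothetical stand-ins. In a real LLM, backpropagation computes these same kinds of gradients across billions of parameters.

```python
# Toy sketch: a tiny linear model y = w*x + b stands in for an LLM.
# All values below are hypothetical and chosen only for illustration.

def predict(params, x):
    w, b = params
    return w * x + b

def mse_loss(params, data):
    """Mean squared error of the model over (x, y) pairs."""
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)

def gradients(params, data):
    """Analytic gradients of the MSE loss with respect to w and b.
    In a deep network, backpropagation is what computes these."""
    w, b = params
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return grad_w, grad_b

def fine_tune(pretrained, data, lr=0.05, steps=200):
    """Start from pre-trained parameters and nudge them toward the new data."""
    w, b = pretrained
    for _ in range(steps):
        grad_w, grad_b = gradients((w, b), data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

pretrained_params = (1.0, 0.0)  # hypothetical "generalist" starting point
domain_data = [(x, 1.5 * x + 0.5) for x in range(-3, 4)]  # hypothetical domain examples

tuned_params = fine_tune(pretrained_params, domain_data)
loss_before = mse_loss(pretrained_params, domain_data)
loss_after = mse_loss(tuned_params, domain_data)
```

The key point is that training starts from the existing parameters rather than from scratch, so the loss on the domain data drops while the starting knowledge is only adjusted, not discarded.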

Imagine training a musician. A general music education provides a foundation, but specialized training in a particular instrument or genre is what unlocks true mastery. Fine-tuning acts similarly, taking the general knowledge of the LLM and refining it for specific tasks. This leads to significantly improved performance and accuracy within our target domains.

The benefits of fine-tuning extend beyond just improved accuracy. We've achieved two remarkable additional enhancements:

- Extended Context Length: One of the key limitations of LLMs is their context window – the amount of text they can "remember" and consider at any given time. By fine-tuning Llama 3.3, we've dramatically increased its context length from 128,000 tokens to a staggering 342,000 tokens. This expanded context allows the model to process and understand much longer and more complex pieces of information, leading to more coherent and insightful responses. Think of it as significantly expanding the model's short-term memory, enabling it to grasp the nuances of longer conversations, documents, and codebases.

- Reduced VRAM Consumption: Fine-tuning has also yielded significant improvements in efficiency. We've managed to reduce the Video RAM (VRAM) consumption of the model by at least 75%. This is a crucial advantage, as it makes the model more accessible and deployable on a wider range of hardware, including systems with limited resources. Lower VRAM requirements translate to lower operational costs and a smaller environmental footprint.
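To give a sense of why memory and context length are linked, here is a back-of-envelope estimate of one cost that grows with sequence length: the output logits, which hold one score per vocabulary entry for every token. This is an illustration under stated assumptions, not a measurement of our setup: it assumes a vocabulary of 128,256 entries (the Llama 3 family tokenizer), 4-byte float32 logits, and a single sequence.

```python
# Back-of-envelope sketch: memory needed to hold the full logit matrix.
# Assumptions (not measurements): Llama 3 family vocabulary size,
# float32 logits, one sequence, nothing else counted.

VOCAB_SIZE = 128_256      # Llama 3 family tokenizer vocabulary
BYTES_PER_LOGIT = 4       # assumed float32
GIB = 1024 ** 3

def logits_bytes(num_tokens, vocab_size=VOCAB_SIZE, bytes_per_logit=BYTES_PER_LOGIT):
    """Bytes required to store one logit per (token, vocabulary entry) pair."""
    return num_tokens * vocab_size * bytes_per_logit

for context_length in (128_000, 342_000):
    size_gib = logits_bytes(context_length) / GIB
    print(f"{context_length:>7} tokens -> {size_gib:.1f} GiB of logits")
```

Under these assumptions the logit matrix alone would exceed the memory of any single current GPU at either context length, which is why techniques that avoid storing it in full matter for long-context training.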

With these improvements, you can reduce the operating expenses (OpEx) of hosting a model on-premises while staying in line with data sovereignty laws. Contact us to see exciting use cases.

This work builds on the following code repository and paper:

https://github.com/apple/ml-cross-entropy

https://arxiv.org/pdf/2411.09009
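The linked work centers on computing the cross-entropy loss without materializing every logit at once. The pure-Python sketch below illustrates only that general idea, using a running log-sum-exp over blocks of the vocabulary. It is not the repository's API or its GPU kernels, and the function names, sizes, and random data are hypothetical.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def full_cross_entropy(embedding, classifier, target):
    """Baseline: build every logit, then take log-sum-exp over all of them."""
    logits = [dot(embedding, row) for row in classifier]
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[target]

def blockwise_cross_entropy(embedding, classifier, target, block_size=4):
    """Same loss, but at most `block_size` logits exist at any one time."""
    running_max = float("-inf")
    running_sum = 0.0  # sum of exp(logit - running_max) over blocks seen so far
    for start in range(0, len(classifier), block_size):
        block = [dot(embedding, row) for row in classifier[start:start + block_size]]
        new_max = max(running_max, max(block))
        # Rescale the old sum to the new maximum, then add this block's terms.
        running_sum = running_sum * math.exp(running_max - new_max)
        running_sum += sum(math.exp(z - new_max) for z in block)
        running_max = new_max
    target_logit = dot(embedding, classifier[target])  # only the correct token's logit
    return running_max + math.log(running_sum) - target_logit

# Hypothetical tiny example: 10-entry vocabulary, 3-dimensional embedding.
rng = random.Random(0)
classifier = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(10)]
embedding = [rng.uniform(-1, 1) for _ in range(3)]

loss_full = full_cross_entropy(embedding, classifier, target=7)
loss_blockwise = blockwise_cross_entropy(embedding, classifier, target=7)
```

Both functions return the same loss up to floating-point rounding. The blockwise version trades a little extra bookkeeping for a peak memory footprint that depends on the block size rather than the vocabulary size.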